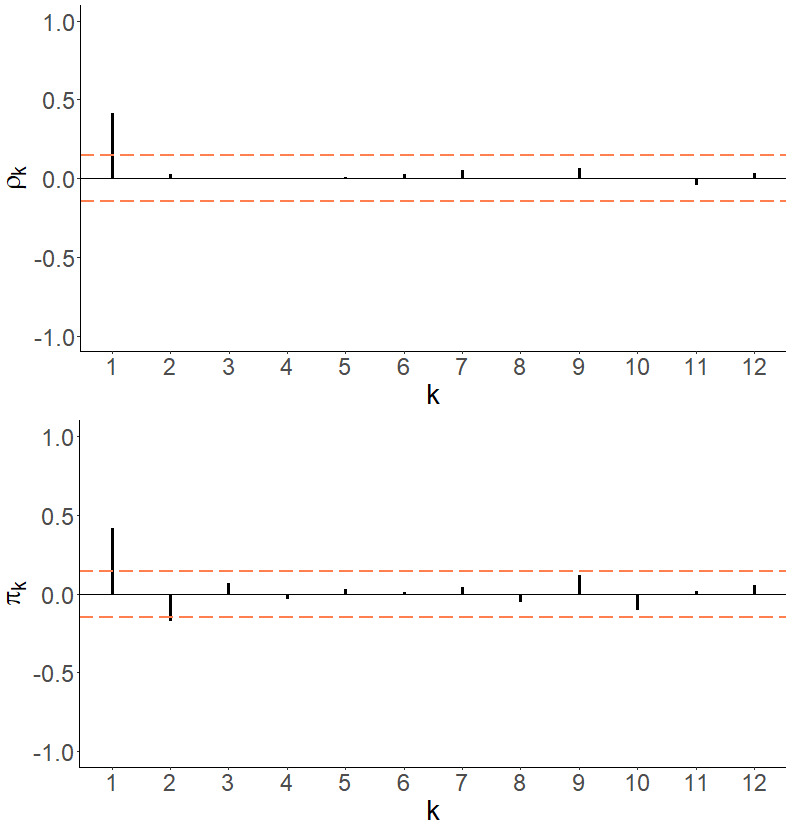

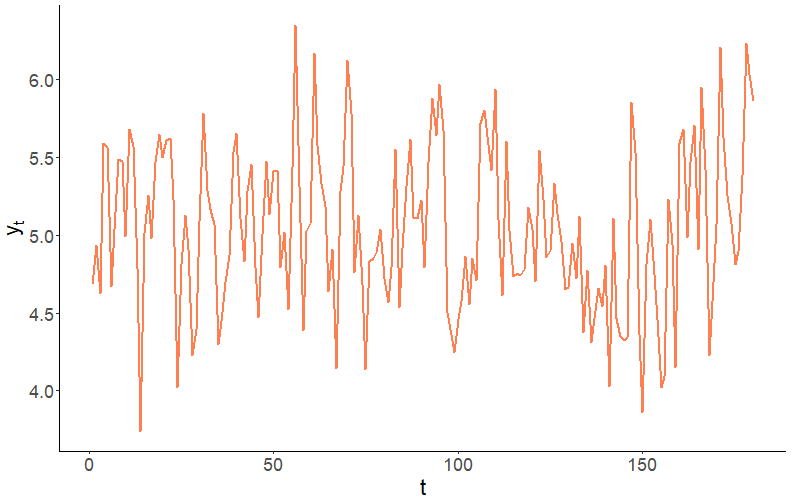

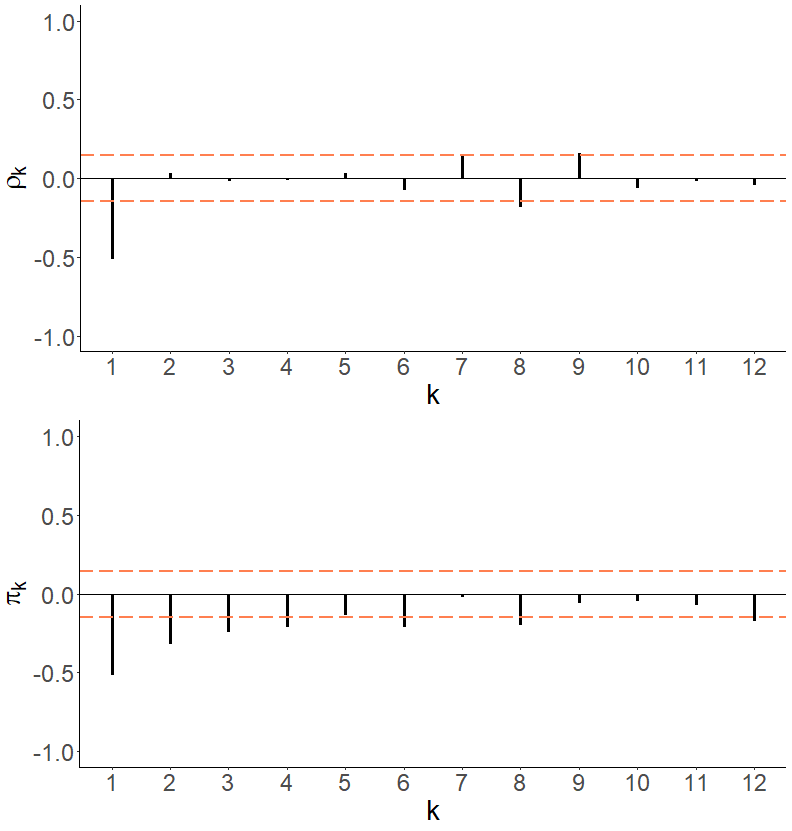

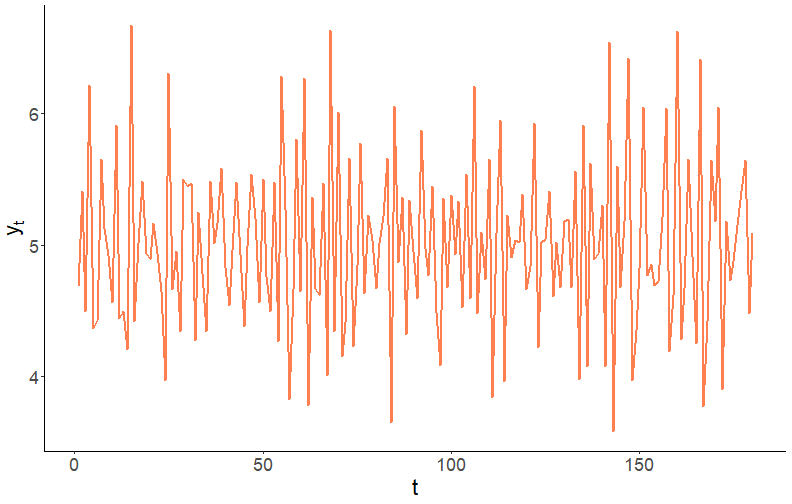

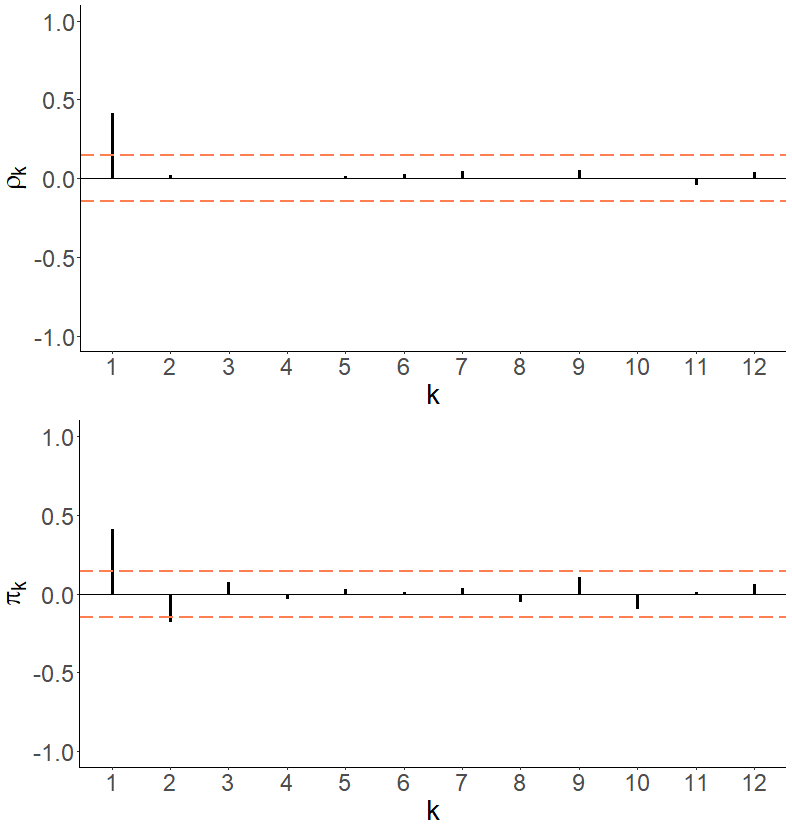

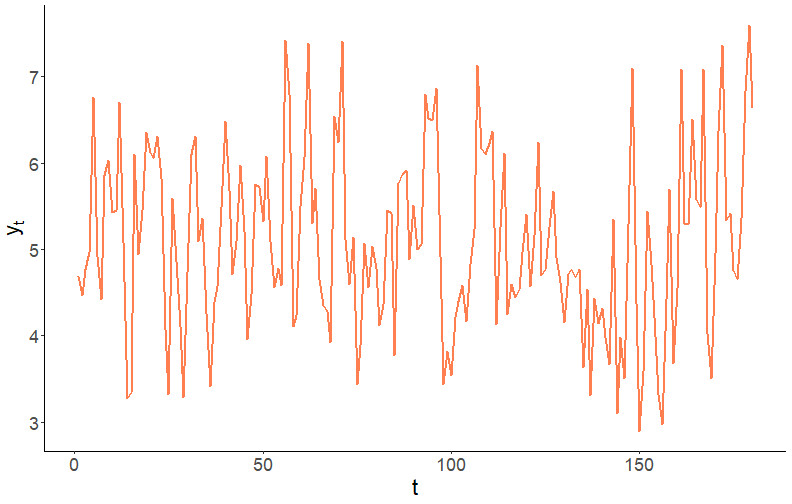

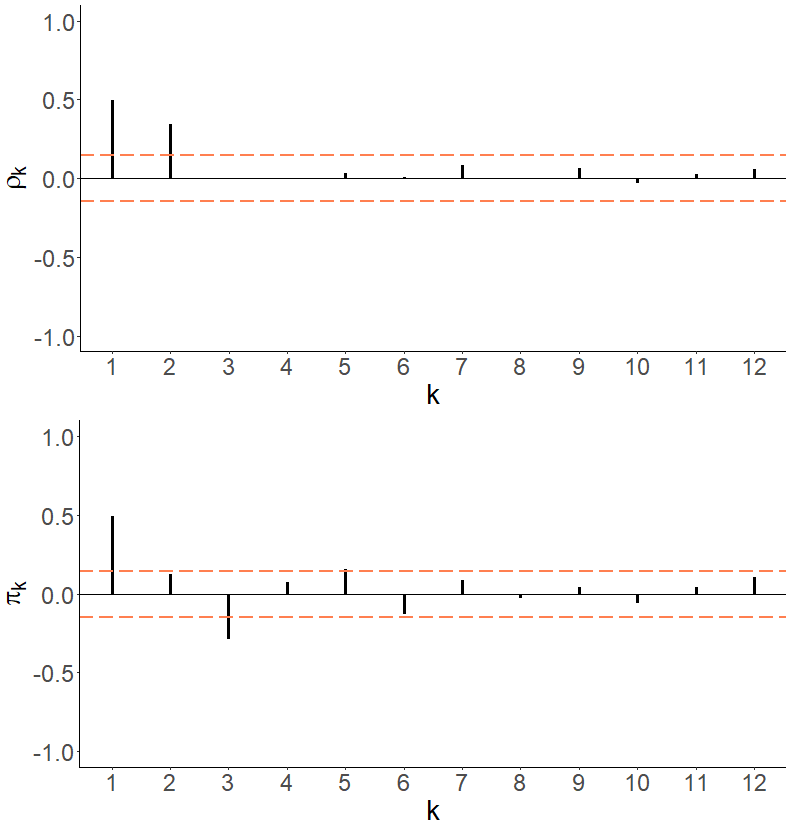

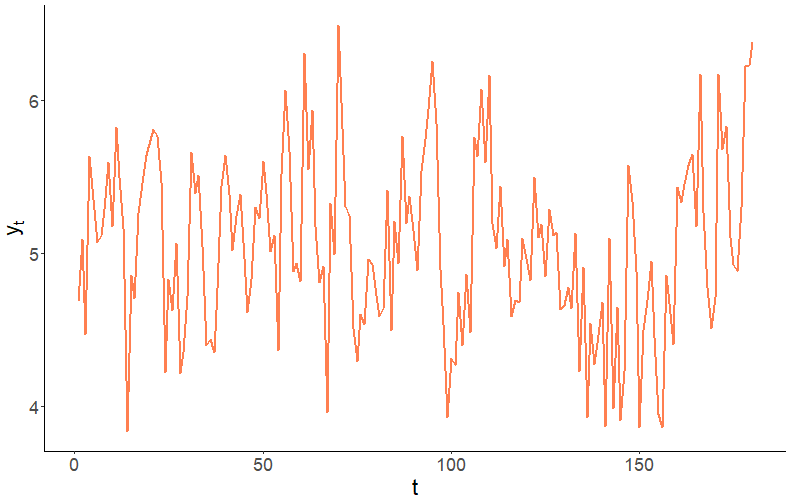

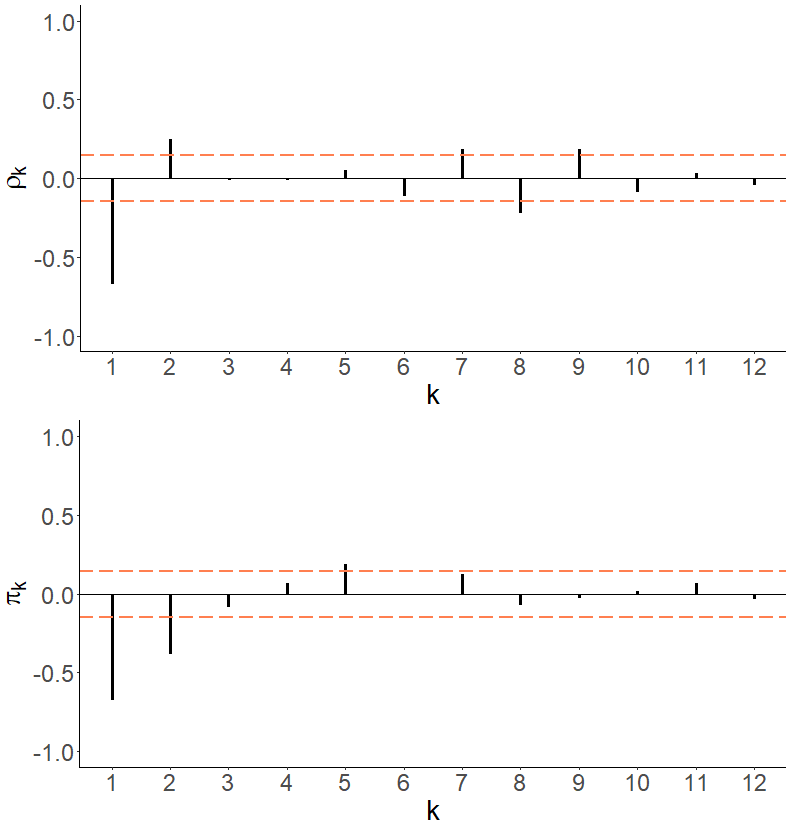

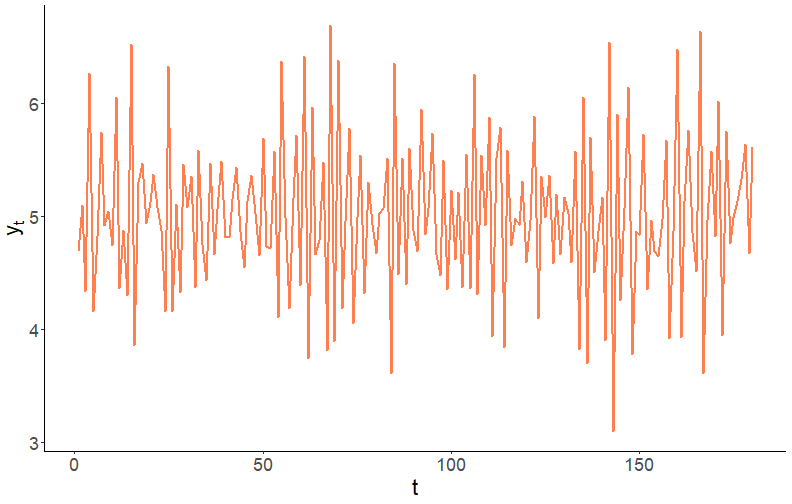

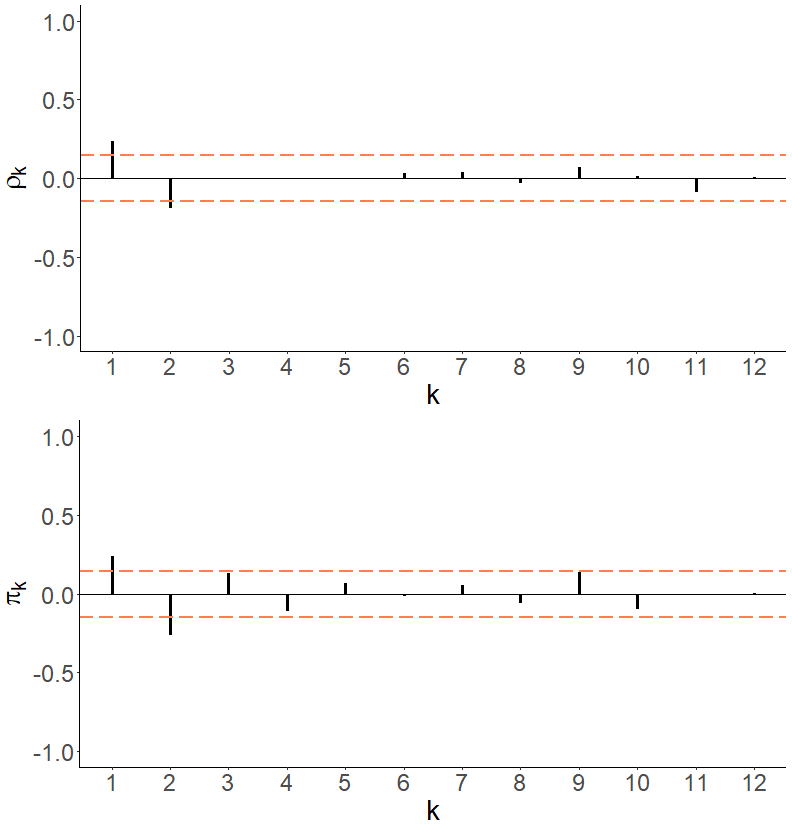

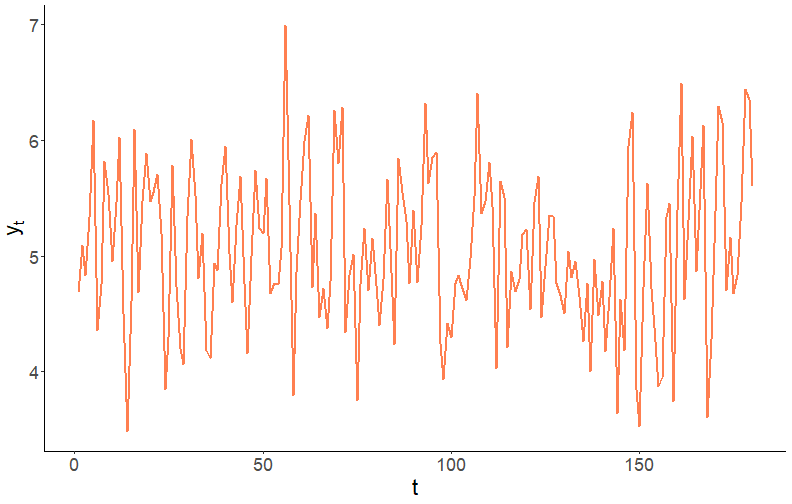

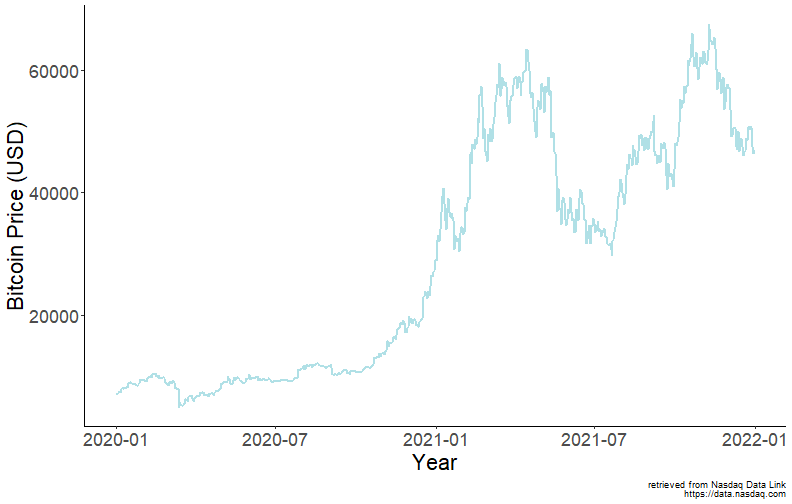

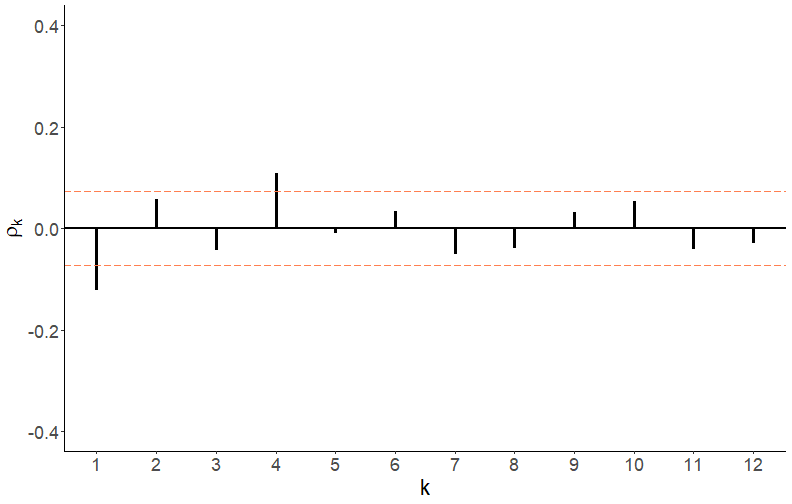

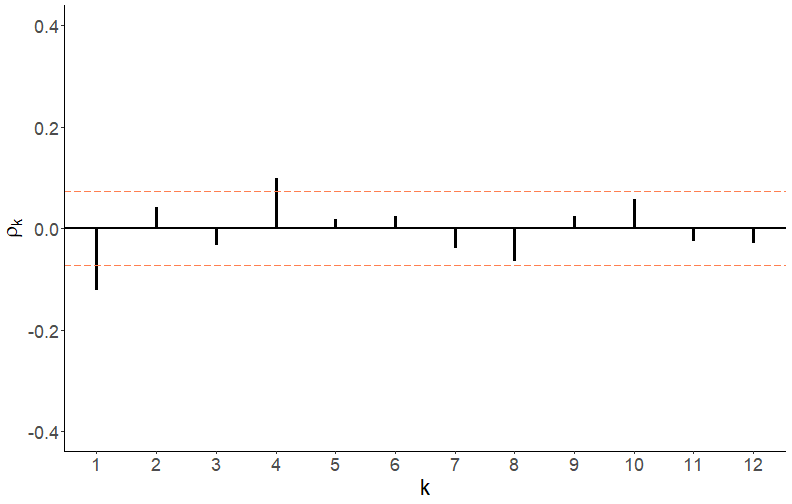

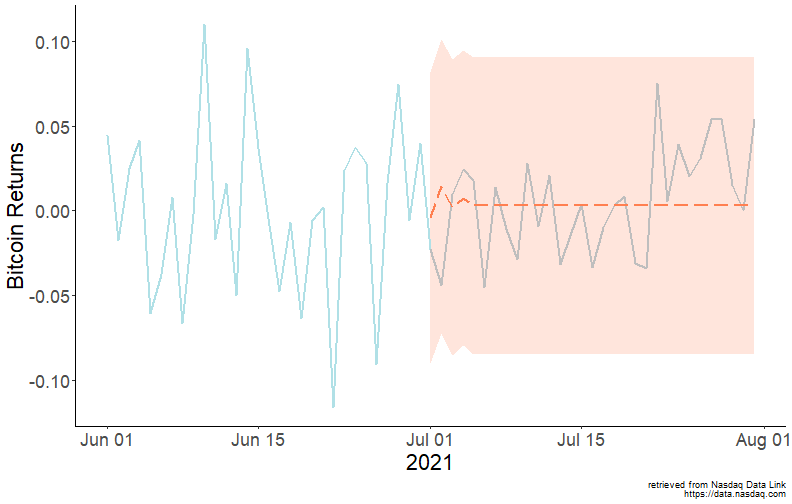

class: center, middle, inverse, title-slide .title[ # Forecasting for Economics and Business ] .subtitle[ ## Lecture 6: Moving Average ] .author[ ### David Ubilava ] .date[ ### University of Sydney ] --- # Wold Decomposition .pull-left[  ] .pull-right[ It is possible to decompose any covariance stationary autoregression into a weighted sum of its error terms (plus a constant). For example, using recursive substitution we can represent an `\(AR(1)\)` process as: `$$y_t = \alpha + \beta y_{t-1} + \varepsilon_t = \frac{\alpha}{1-\beta} + \sum_{i=0}^{\infty}\beta^i\varepsilon_{t-i}$$` This is known as the *Wold decomposition*. ] --- # Wold Decomposition .right-column[ Specifically, the *Wold Decomposition Theorem* states that if `\(\{Y_t\}\)` is a covariance stationary process, and `\(\{\varepsilon_t\}\)` is a white noise process, then there exists a unique linear representation as: `$$Y_t = \mu + \sum_{i=0}^{\infty}\theta_i\varepsilon_{t-i}$$` where `\(\mu\)` is the deterministic component, and the rest is the stochastic component with `\(\theta_0=1\)`, and `\(\sum_{i=0}^{\infty}\theta_i^2 < \infty\)`. This is an infinite-order moving average process, denoted by `\(MA(\infty)\)`. ] --- # First-order moving average .right-column[ What does a time series of a moving average process looks like? To develop an intuition, let's begin with a `\(MA(1)\)`: `$$y_t = \mu + \varepsilon_t + \theta\varepsilon_{t-1},\;~~\varepsilon_t\sim iid~\text{N}\left(0,\sigma^2_{\varepsilon}\right)$$` Suppose `\(\mu = 5\)`, and `\(\sigma_{\varepsilon}=0.5\)`, and let's simulate time series by setting `\(\theta\)` to `\(0.5\)`, `\(-1\)`, and `\(2\)`, respectively. ] --- # First-order moving average .pull-left[ <!-- --> ] .pull-right[ <!-- --> ] --- # First-order moving average .pull-left[ <!-- --> ] .pull-right[ <!-- --> ] --- # First-order moving average .pull-left[ <!-- --> ] .pull-right[ <!-- --> ] --- # First-order moving average .right-column[ Several features of interest are apparent: - only one spike at the first lag (i.e., only `\(\rho_1 \neq 0\)`), the remaining autocorrelations are zero; - the size of the spike is directly proportional to the size of `\(\theta\)`, for `\(|\theta| < 1\)`; - the sign of the spike is the same as that of `\(\theta\)`. ] --- # First-order moving average .right-column[ The unconditional mean of the series is equal to its deterministic component, `\(\mu\)`. That is: `$$E(y_t) = E(\mu + \varepsilon_t + \theta\varepsilon_{t-1}) = \mu$$` The unconditional variance is proportional to `\(\theta\)`. `$$Var(y_t) = E(y_t - \mu)^2 = E(\varepsilon_t + \theta\varepsilon_{t-1})^2 = (1+\theta^2)\sigma^2_{\varepsilon}$$` <!-- - From the above, the mean and variance of the process are time-invariant. --> ] --- # First-order moving average .right-column[ The first-order autocovariance: `$$\gamma_1 = E\left[(y_t - \mu)(y_{t-1} - \mu)\right] = E\left[(\varepsilon_t + \theta\varepsilon_{t-1})(\varepsilon_{t-1} + \theta\varepsilon_{t-2})\right] = \theta\sigma^2_{\varepsilon}$$` The first-order autocorrelation: `$$\rho_1 = \frac{\gamma_1}{\gamma_0} = \frac{\theta}{1+\theta^2}$$` The time-invariant autocovariance, in conjunction with time-invariant mean and variance measures, suggest that MA(1) is a covariance stationary process. ] --- # First-order moving average .right-column[ Note that the two cases, `\(\theta=0.5\)` and `\(\theta=2\)` produce identical autocorrelation functions (and that `\(\theta=0.5\)` is the inverse of `\(\theta=2\)`). This can be generalized to any `\(\theta\)` and `\(1/\theta\)`. That is, two different MA processes can produce the same autocorrelation. But only that with the moving average parameter less than unity is *invertible*. Invertability of a moving average process is a useful feature as it allows us to represent the unobserved error term as a function of past observations of the series. ] --- # Second-order moving average .right-column[ The foregoing analysis of a MA(1) process can be generalized to any MA(q) process. Let's focus on MA(2), for examle: `$$y_t = \mu + \varepsilon_t+\theta_1\varepsilon_{t-1}+\theta_2\varepsilon_{t-2}$$` Suppose, as before, `\(\mu = 5\)`, and `\(\sigma_{\varepsilon}=0.5\)`, and let's simulate time series by setting `\(\{\theta_1,\theta_2\}\)` to `\(\{0.5,0.5\}\)`, `\(\{-1,0.5\}\)`, and `\(\{2,-0.5\}\)`, respectively. ] --- # Second-order moving average .pull-left[ <!-- --> ] .pull-right[ <!-- --> ] --- # Second-order moving average .pull-left[ <!-- --> ] .pull-right[ <!-- --> ] --- # Second-order moving average .pull-left[ <!-- --> ] .pull-right[ <!-- --> ] --- # Second-order moving average .right-column[ The unconditional mean and variance are: `$$E(y_t) = E(\mu + \varepsilon_t+\theta_1\varepsilon_{t-1}+\theta_2\varepsilon_{t-2}) = \mu$$` and `$$Var(y_t) = E(y_t-\mu)^2 = (1+\theta_1^2+\theta_2^2)\sigma_{\varepsilon}^2$$` ] --- # Second-order moving average .right-column[ The autocovariance at the first lag is: `$$\gamma_1 = E[(y_t-\mu)(y_{t-1}-\mu)] = (\theta_1+\theta_1\theta_2)\sigma_{\varepsilon}^2$$` and the corresponding autocorrelation is: `$$\rho_1 = \frac{\gamma_1}{\gamma_0} = \frac{\theta_1+\theta_1\theta_2}{1+\theta_1^2+\theta_2^2}$$` ] --- # Second-order moving average .right-column[ The autocovariance at the second lag is: `$$\gamma_2 = E[(y_t-\mu)(y_{t-2}-\mu)] = \theta_2\sigma_{\varepsilon}^2$$` and the corresponding autocorrelation is: `$$\rho_2 = \frac{\gamma_2}{\gamma_0} = \frac{\theta_2}{1+\theta_1^2+\theta_2^2}$$` The autocovariance and autocorrelation at higher lags are zero. In general, for any `\(MA(q)\)`, `\(\rho_k=0,\;\;\forall\; k>q\)`. ] --- # Bitcoin prices (avg. across major exchanges) .right-column[ <!-- --> ] --- # ACF of Bitcoin daily returns .pull-left[ Autocorrelations at lags one and four appear statistically significant. ] .pull-right[ <!-- --> ] --- # PACF of Bitcoin daily returns .pull-left[ Partial autocorrelations, also, statistically significant at lags 1 and 4. ] .pull-right[ <!-- --> ] --- # Order selection using information criteria .pull-left[ |q |AIC |SIC | |:--|:-----|:-----| |1 |0.259 |0.271 | |2 |0.262 |0.281 | |3 |0.264 |0.289 | |4 |0.254 |0.286 | |5 |0.259 |0.297 | |6 |0.262 |0.306 | |7 |0.263 |0.313 | ] .pull-right[ AIC suggests `\(MA(4)\)` while SIC suggests `\(MA(1)\)`. Going with MA(4), the estimated parameters and their standard errors are: | | `\(\mu\)` | `\(\theta_1\)` | `\(\theta_2\)` | `\(\theta_3\)` | `\(\theta_4\)` | |:--------|:-----:|:----------:|:----------:|:----------:|:----------:| |estimate | 0.003 | -0.114 | 0.045 | -0.021 | 0.115 | |s.e. | 0.002 | 0.037 | 0.037 | 0.036 | 0.038 | ] --- # One-step-ahead point forecast .right-column[ One-step-ahead point forecast: `$$y_{t+1|t} = E(y_{t+1}|\Omega_t) = \mu + \theta_1 \varepsilon_{t}$$` One-step-ahead forecast error: `$$e_{t+1|t} = y_{t+1} - y_{t+1|t} = \varepsilon_{t+1}$$` ] --- # One-step-ahead interval forecast .right-column[ One-step-ahead forecast variance: `$$\sigma_{t+1|t}^2 = E(e_{t+1|t}^2) = E(\varepsilon_{t+1}^2) = \sigma_{\varepsilon}^2$$` One-step-ahead (95%) interval forecast: `$$y_{t+1|t} \pm 1.96\sigma_{\varepsilon}$$` ] --- # h-step-ahead point forecast (h>q) .right-column[ h-step-ahead point forecast: `$$y_{t+h|t} = E(\mu + \varepsilon_{t+h} + \theta_1 \varepsilon_{t+h-1} + \cdots + \theta_q \varepsilon_{t+h-q}) = \mu$$` h-step-ahead forecast error: `$$e_{t+h|t} = y_{t+h} - y_{t+h|t} = \varepsilon_{t+h} + \theta_1 \varepsilon_{t+h-1} + \cdots + \theta_q \varepsilon_{t+h-q}$$` ] --- # h-step-ahead interval forecast (h>q) .right-column[ h-step-ahead forecast variance: `$$\sigma_{t+h|t}^2 = E(e_{t+h|t}^2) = \sigma_{\varepsilon}^2(1+\theta_1^2+\cdots+\theta_q^2)$$` h-step-ahead (95%) interval forecast: `$$\mu \pm 1.96\sigma_{\varepsilon}(1+\theta_1^2+\cdots+\theta_q^2)$$` ] --- # Forecasting Bitcoin daily returns using MA(4) .right-column[ <!-- --> ] --- # Readings .pull-left[  ] .pull-right[ Gonzalez-Rivera, Chapter 6 Hyndman & Athanasopoulos, [9.4](https://otexts.com/fpp3/MA.html) ]