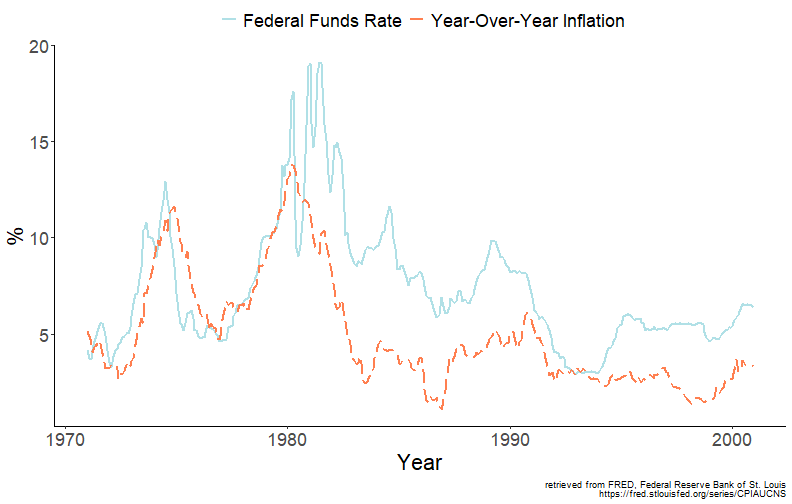

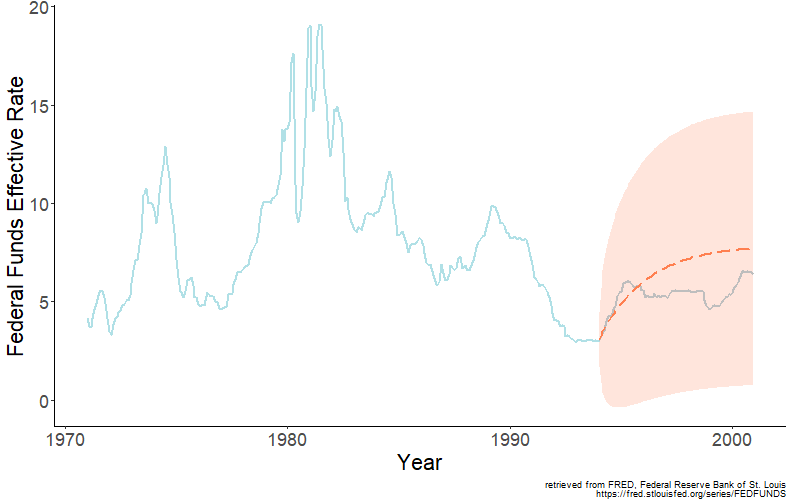

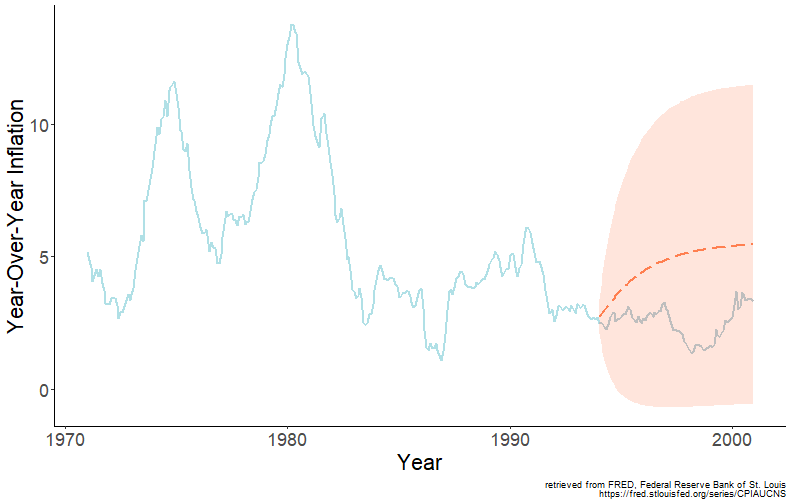

class: center, middle, inverse, title-slide .title[ # Forecasting for Economics and Business ] .subtitle[ ## Lecture 7: Vector Autoregression ] .author[ ### David Ubilava ] .date[ ### University of Sydney ] --- # Economic variables are inter-related .pull-left[  ] .pull-right[ Economic variables are often (and usually) interrelated; for example, - income affects consumption; - interest rates impact investment. The dynamic linkages between two (or more) economic variables can be modeled as a *system of equations*, better known as a vector autoregression, or simply VAR. ] --- # Vector autoregression .right-column[ To begin, consider a bivariate first-order VAR. Let `\(\{X_{1t}\}\)` and `\(\{X_{2t}\}\)` be the stationary stochastic processes. A bivariate VAR(1), is then given by: `$$\begin{aligned} x_{1t} &= \alpha_1 + \pi_{11}x_{1t-1} + \pi_{12}x_{2t-1} + \varepsilon_{1t} \\ x_{2t} &= \alpha_2 + \pi_{21}x_{1t-1} + \pi_{22}x_{2t-1} + \varepsilon_{2t} \end{aligned}$$` where `\(\varepsilon_{1,t} \sim iid(0,\sigma_1^2)\)` and `\(\varepsilon_{2,t} \sim iid(0,\sigma_2^2)\)`, and the two can be correlated, i.e., `\(Cov(\varepsilon_{1,t},\varepsilon_{2,t}) \neq 0\)`. ] --- # Vector autoregression .right-column[ An `\(n\)`-dimensional VAR of order `\(p\)`, VAR(p), presented in matrix notation: `$$\mathbf{x}_t = \mathbf{\alpha} + \Pi^{(1)} \mathbf{x}_{t-1} + \ldots + \Pi^{(p)} \mathbf{x}_{t-p} + \mathbf{\varepsilon}_t,$$` where `\(\mathbf{x}_t = (x_{1,t},\ldots,x_{n,t})'\)` is a vector of `\(n\)` (potentially) related variables; `\(\mathbf{\varepsilon}_t = (\varepsilon_{1,t},\ldots,\varepsilon_{n,t})'\)` is a vector of error terms, such that `\(E\left(\mathbf{\varepsilon}_t\right) = \mathbf{0}\)`, `\(E\left(\mathbf{\varepsilon}_t^{}\mathbf{\varepsilon}_t^{\prime}\right) = \Sigma_{\mathbf{\varepsilon}}\)`, and `\(E\left(\mathbf{\varepsilon}_{t}^{}\mathbf{\varepsilon}_{s \neq t}^{\prime}\right) = 0\)`. ] --- # A parameter matrix of a vector autoregression .right-column[ `\(\Pi^{(1)},\ldots,\Pi^{(p)}\)` are `\(n\)`-dimensional parameter matrices such that: `$$\Pi^{(j)} = \left[ \begin{array}{cccc} \pi_{11}^{(j)} & \pi_{12}^{(j)} & \cdots & \pi_{1n}^{(j)} \\ \pi_{21}^{(j)} & \pi_{22}^{(j)} & \cdots & \pi_{2n}^{(j)} \\ \vdots & \vdots & \ddots & \vdots \\ \pi_{n1}^{(j)} & \pi_{n2}^{(j)} & \cdots & \pi_{nn}^{(j)} \end{array} \right],\;~~j=1,\ldots,p$$` ] --- # Features of vector autoregression .right-column[ General features of a (reduced-form) vector autoregression are that: - only the lagged values of the dependent variables are on the right-hand-side of the equations. * Although, trends and seasonal variables can also be included. - Each equation has the same set of right-hand-side variables. * However, it is possible to impose different lag structure across the equations, especially when `\(p\)` is relatively large. This is because the number of parameters increases very quickly with the number of lags or the number of variables in the system. - The autregressive order, `\(p\)`, is the largest lag across all equations. ] --- # Modeling vector autoregression .right-column[ The autoregressive order, `\(p\)`, can be determined using system-wide information criteria: `$$\begin{aligned} & AIC = \ln\left|\Sigma_{\mathbf{\varepsilon}}\right| + \frac{2}{T}(pn^2+n) \\ & SIC = \ln\left|\Sigma_{\mathbf{\varepsilon}}\right| + \frac{\ln{T}}{T}(pn^2+n) \end{aligned}$$` where `\(\left|\Sigma_{\mathbf{\varepsilon}}\right|\)` is the determinant of the residual covariance matrix; `\(n\)` is the number of equations, and `\(T\)` is the total number of observations. ] --- # Estimating vector autoregression .right-column[ When each equation of VAR has the same regressors, the OLS can be applied to each equation individually to estimate the regression parameters - i.e., the estimation can be carried out on the equation-by-equation basis. When processes are covariance-stationarity, conventional t-tests and F-tests are applicable for hypotheses testing. ] --- # U.S. Interest Rates and Inflation .right-column[ <!-- --> ] --- # U.S. Interest Rates and Inflation: VAR(3) .pull-left[ <table class="myTable"> <thead> <tr> <th style="text-align:left;"> p </th> <th style="text-align:left;"> AIC </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> 1 </td> <td style="text-align:left;"> -2.892 </td> </tr> <tr> <td style="text-align:left;"> 2 </td> <td style="text-align:left;"> -3.189 </td> </tr> <tr> <td style="text-align:left;"> 3 </td> <td style="text-align:left;"> -3.244 </td> </tr> <tr> <td style="text-align:left;"> 4 </td> <td style="text-align:left;"> -3.208 </td> </tr> </tbody> </table> ] .pull-right[ <table class="myTable table" style="font-size: 22px; margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> </th> <th style="text-align:right;"> `\(\alpha_i\)` </th> <th style="text-align:right;"> `\(\pi_{i1}^{(1)}\)` </th> <th style="text-align:right;"> `\(\pi_{i1}^{(2)}\)` </th> <th style="text-align:right;"> `\(\pi_{i1}^{(3)}\)` </th> <th style="text-align:right;"> `\(\pi_{i2}^{(1)}\)` </th> <th style="text-align:right;"> `\(\pi_{i2}^{(2)}\)` </th> <th style="text-align:right;"> `\(\pi_{i2}^{(3)}\)` </th> </tr> </thead> <tbody> <tr grouplength="2"><td colspan="8" style="border-bottom: 1px solid;"><strong>Interest Rate (i=1)</strong></td></tr> <tr> <td style="text-align:left;padding-left: 2em;" indentlevel="1"> estimate </td> <td style="text-align:right;"> 0.193 </td> <td style="text-align:right;"> 1.429 </td> <td style="text-align:right;"> -0.651 </td> <td style="text-align:right;"> 0.174 </td> <td style="text-align:right;"> 0.199 </td> <td style="text-align:right;"> -0.311 </td> <td style="text-align:right;"> 0.147 </td> </tr> <tr> <td style="text-align:left;padding-left: 2em;" indentlevel="1"> s.e. </td> <td style="text-align:right;"> 0.082 </td> <td style="text-align:right;"> 0.053 </td> <td style="text-align:right;"> 0.086 </td> <td style="text-align:right;"> 0.053 </td> <td style="text-align:right;"> 0.103 </td> <td style="text-align:right;"> 0.167 </td> <td style="text-align:right;"> 0.102 </td> </tr> <tr grouplength="2"><td colspan="8" style="border-bottom: 1px solid;"><strong>Inflation Rate (i=2)</strong></td></tr> <tr> <td style="text-align:left;padding-left: 2em;" indentlevel="1"> estimate </td> <td style="text-align:right;"> 0.047 </td> <td style="text-align:right;"> 0.044 </td> <td style="text-align:right;"> 0.027 </td> <td style="text-align:right;"> -0.070 </td> <td style="text-align:right;"> 1.250 </td> <td style="text-align:right;"> -0.097 </td> <td style="text-align:right;"> -0.164 </td> </tr> <tr> <td style="text-align:left;padding-left: 2em;" indentlevel="1"> s.e. </td> <td style="text-align:right;"> 0.042 </td> <td style="text-align:right;"> 0.027 </td> <td style="text-align:right;"> 0.043 </td> <td style="text-align:right;"> 0.027 </td> <td style="text-align:right;"> 0.052 </td> <td style="text-align:right;"> 0.085 </td> <td style="text-align:right;"> 0.052 </td> </tr> </tbody> </table> ] --- # Testing in-sample Granger causality .right-column[ Consider a bivariate VAR(p): `$$\begin{aligned} x_{1t} &= \alpha_1 + \pi_{11}^{(1)} x_{1t-1} + \cdots + \pi_{11}^{(p)} x_{1t-p} \\ &+ \pi_{12}^{(1)} x_{2t-1} + \cdots + \pi_{12}^{(p)} x_{2t-p} +\varepsilon_{1t} \\ x_{2t} &= \alpha_1 + \pi_{21}^{(1)} x_{1t-1} + \cdots + \pi_{21}^{(p)} x_{1t-p} \\ &+ \pi_{22}^{(1)} x_{2t-1} + \cdots + \pi_{22}^{(p)} x_{2t-p} +\varepsilon_{2t} \end{aligned}$$` - `\(\{X_2\}\)` does not Granger cause `\(\{X_1\}\)` if `\(\pi_{12}^{(1)}=\cdots=\pi_{12}^{(p)}=0\)` - `\(\{X_1\}\)` does not Granger cause `\(\{X_2\}\)` if `\(\pi_{21}^{(1)}=\cdots=\pi_{21}^{(p)}=0\)` ] --- # Testing in-sample Granger causality .right-column[ <table class="myTable"> <thead> <tr> <th style="text-align:left;"> </th> <th style="text-align:left;"> F </th> <th style="text-align:left;"> Pr(>F) </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> Inflation Rate GC Interest Rate </td> <td style="text-align:left;"> 2.825 </td> <td style="text-align:left;"> 0.039 </td> </tr> <tr> <td style="text-align:left;"> Interest Rate GC Inflation Rate </td> <td style="text-align:left;"> 5.223 </td> <td style="text-align:left;"> 0.002 </td> </tr> </tbody> </table> ] --- # One-step-ahead forecasts .right-column[ Consider realizations of dependent variables from a bivariate VAR(1): `$$\begin{aligned} x_{1t+1} &= \alpha_1 + \pi_{11} x_{1t} + \pi_{12} x_{2t} + \varepsilon_{1t+1} \\ x_{2t+1} &= \alpha_2 + \pi_{21} x_{1t} + \pi_{22} x_{2t} + \varepsilon_{2t+1} \end{aligned}$$` Point forecasts are: `$$\begin{aligned} x_{1t+1|t} &= E(x_{1t+1}|\Omega_t) = \alpha_1 + \pi_{11} x_{1t} + \pi_{12} x_{2t} \\ x_{2t+1|t} &= E(x_{2t+1}|\Omega_t) = \alpha_2 + \pi_{21} x_{1t} + \pi_{22} x_{2t} \end{aligned}$$` ] --- # One-step-ahead forecasts .right-column[ Forecast errors are: `$$\begin{aligned} e_{1t+1} &= x_{1t+1} - x_{1t+1|t} = \varepsilon_{1t+1} \\ e_{2t+1} &= x_{2t+1} - x_{2t+1|t} = \varepsilon_{2t+1} \end{aligned}$$` Forecast variances are: `$$\begin{aligned} \sigma_{1t+1}^2 &= E(x_{1t+1} - x_{1t+1|t}|\Omega_t)^2 = E(\varepsilon_{1t+1}^2) = \sigma_{1}^2 \\ \sigma_{2t+1}^2 &= E(x_{2t+1} - x_{2t+1|t}|\Omega_t)^2 = E(\varepsilon_{2t+1}^2) = \sigma_{2}^2 \end{aligned}$$` ] --- # Multi-step-ahead forecasts .right-column[ Realizations of dependent variables in period `\(t+h\)`: `$$\begin{aligned} x_{1t+h} &= \alpha_1 + \pi_{11} x_{1t+h-1} + \pi_{12} x_{2t+h-1} + \varepsilon_{1t+h} \\ x_{2t+h} &= \alpha_2 + \pi_{21} x_{1t+h-1} + \pi_{22} x_{2t+h-1} + \varepsilon_{2t+h} \end{aligned}$$` Point forecasts are: `$$\begin{aligned} x_{1t+h|t} &= E(x_{1t+1}|\Omega_t) = \alpha_1 + \pi_{11} x_{1t+h-1|t} + \pi_{12} x_{2t+h-1|t} \\ x_{2t+h|t} &= E(x_{2t+1}|\Omega_t) = \alpha_2 + \pi_{21} x_{1t+h-1|t} + \pi_{22} x_{2t+h-1|t} \end{aligned}$$` ] --- # Multi-step-ahead forecasts .right-column[ Forecast errors are: `$$\begin{aligned} e_{1t+h} &= x_{1t+h} - x_{1t+h|t} = \pi_{11} e_{1t+h-1} + \pi_{12} e_{2t+h-1} + \varepsilon_{1t+h} \\ e_{2t+h} &= x_{2t+h} - x_{2t+h|t} = \pi_{21} e_{1t+h-1} + \pi_{22} e_{2t+h-1} + \varepsilon_{2t+h} \end{aligned}$$` Forecast variances are the functions of error variances and covariances, and the model parameters. ] --- # Forecasting interest rate .right-column[ <!-- --> ] --- # Forecasting inflation rate .right-column[ <!-- --> ] --- # Out-of-Sample Granger Causality .right-column[ The previously discussed (in sample) tests of causality in Granger sense are frequently performed in practice, but the 'true spirit' of such test is to assess the ability of a variable to help predict another variable in an out-of-sample setting. ] --- # Out-of-Sample Granger Causality .right-column[ Consider restricted and unrestricted information sets: `$$\begin{aligned} &\Omega_{t}^{(r)} \equiv \Omega_{t}(X_1) = \{x_{1,t},x_{1,t-1},\ldots\} \\ &\Omega_{t}^{(u)} \equiv \Omega_{t}(X_1,X_2) = \{x_{1,t},x_{1,t-1},\ldots,x_{2,t},x_{2,t-1},\ldots\} \end{aligned}$$` Following Granger's definition of causality: `\(\{X_2\}\)` is said to cause `\(\{X_1\}\)` if `\(\sigma_{x_1}^2\left(\Omega_{t}^{(u)}\right) < \sigma_{x_1}^2\left(\Omega_{t}^{(r)}\right)\)`, meaning that we can better predict `\(X_1\)` using all available information on `\(X_1\)` and `\(X_2\)`, rather than that on `\(X_1\)` only. ] --- # Out-of-Sample Granger Causality .right-column[ Let the forecasts based on each of the information sets be: `$$\begin{aligned} &x_{1t+h|t}^{(r)} = E\left(x_{1t+h}|\Omega_{t}^{(r)}\right) \\ &x_{1t+h|t}^{(u)} = E\left(x_{1t+h}|\Omega_{t}^{(u)}\right) \end{aligned}$$` ] --- # Out-of-Sample Granger Causality .right-column[ For these forecasts, the corresponding forecast errors are: `$$\begin{aligned} & e_{1t+h}^{(r)} = x_{1t+h} - x_{1t+h|t}^{(r)}\\ & e_{1t+h}^{(u)} = x_{1t+h} - x_{1t+h|t}^{(u)} \end{aligned}$$` The out-of-sample forecast errors are then evaluated by comparing the loss functions based on these forecasts errors. ] --- # Out-of-Sample Granger Causality .right-column[ For example, assuming quadratic loss, and `\(P\)` out-of-sample forecasts: `$$\begin{aligned} RMSFE^{(r)} &= \sqrt{\frac{1}{P}\sum_{s=1}^{P}\left(e_{1R+s|R-1+s}^{(r)}\right)^2} \\ RMSFE^{(u)} &= \sqrt{\frac{1}{P}\sum_{s=1}^{P}\left(e_{1R+s|R-1+s}^{(u)}\right)^2} \end{aligned}$$` where `\(R\)` is the size of the (first) estimation window. `\(\{X_2\}\)` causes `\(\{X_1\}\)` *in Granger sense* if `\(RMSFE^{(u)} < RMSFE^{(r)}\)`. ] --- # Out-of-Sample Granger Causality .right-column[ In the 'interest rate' equation, the RMSFE of the unrestricted model (0.1742) is greater than the RMSFE of the restricted model (0.1519), thus indicating that inflation rates do not out-of-sample Granger cause interest rates. In the 'inflation rate' equation, the RMSFE of the unrestricted model (0.2097) is less than the RMSFE of the restricted model (0.2128), thus providing evidence that interest rates out-of-sample Granger cause inflation rates. ] --- # Readings .pull-left[  ] .pull-right[ Ubilava, [Chapter 7](https://davidubilava.com/forecasting/docs/vector-autoregression.html) Gonzalez-Rivera, Chapter 11 Hyndman & Athanasopoulos, [12.3](https://otexts.com/fpp3/VAR.html) ]