Tutorial 8: Threshold Autoregression

In this tutorial, we will generate regime-dependent series, we will apply a grid-search method to obtain the threshold parameter, we will obtain and compare one-step-ahead forecasts from competing models using a rolling window procedure, and we will apply bootstrap resampling method to generate multi-step-ahead forecasts from a threshold regression. To run the code, the data.table and ggplot2 packages need to be installed and loaded.

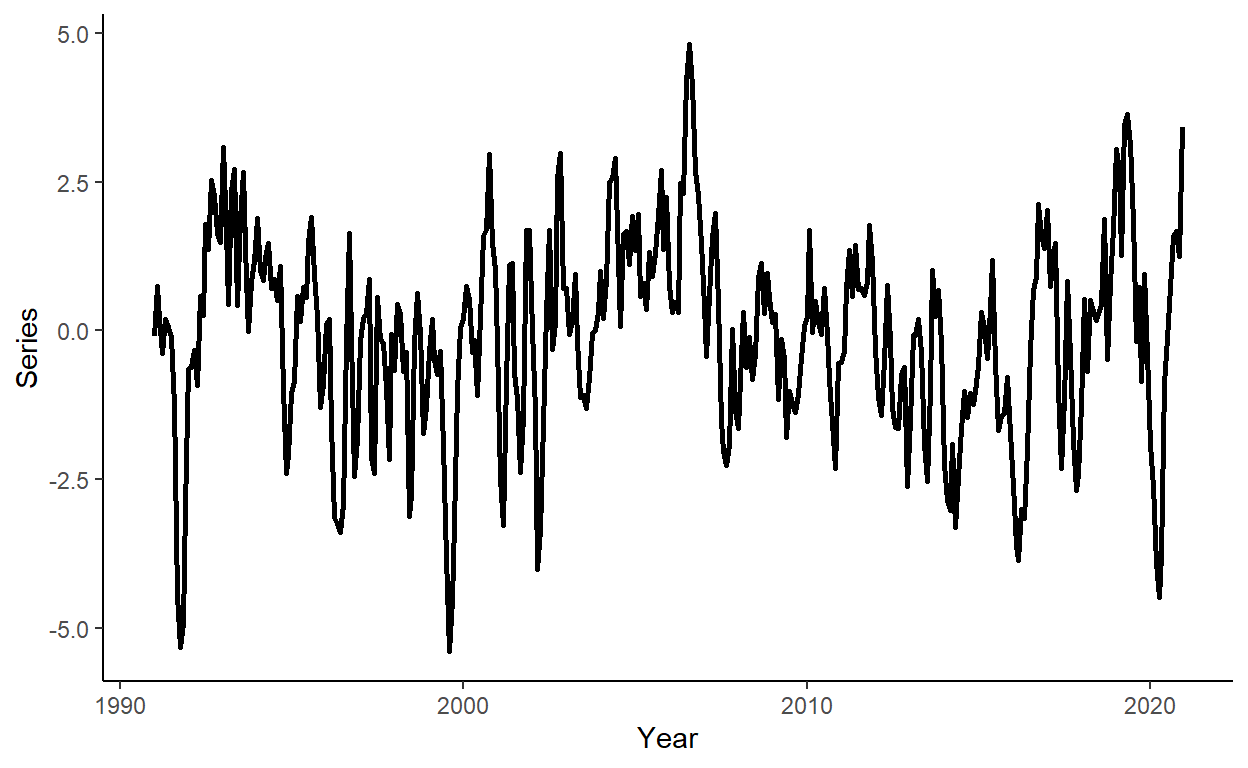

Let’s generate a time series that follow a TAR(2) process of the following form: \[y_t = \left\{\begin{array} {ll} 0.9y_{t-1} + \varepsilon_t & \text{if}~~y_{t-1}\ge0 \\ 1.2y_{t-1}-0.5y_{t-2} + \varepsilon_t & \text{if}~~y_{t-1} < 0 \end{array}\right. \] where \(e_{t} \sim N(0,\sigma^2)\). This suggests that the dynamics of the time series change depending on the sign of the lagged dependent variable.

n <- 360

set.seed(8)

e <- rnorm(n,0,1)

y <- rep(NA,n)

y[1] <- e[1]

if(y[1]>=0){

y[2] <- 0.9*y[1]+e[2]

}else{

y[2] <- 1.2*y[1]+e[2]

}

for(i in 3:n){

if(y[i-1]>=0){

y[i] <- 0.9*y[i-1]+e[i]

}else{

y[i] <- 1.2*y[i-1]-0.5*y[i-2]+e[i]

}

}Generate a vector of some arbitrary dates (e.g., suppose we deal with the monthly series beginning from January 1991), and store these along with \(y\) in a data.table, call it ‘dt’. Then plot the realized time series using ggplot function.

date <- seq(as.Date("1991-01-01"),by="month",along.with=y)

dt <- data.table(date,y)

ggplot(dt,aes(x=date,y=y))+

geom_line(size=1)+

labs(x="Year",y="Series")+

theme_classic()

Obtain the AIC and SIC for AR(p), \(p=1,\ldots,4\); decide on the optimal lag length based on AIC.

dt[,`:=`(y1=shift(y),y2=shift(y,2),y3=shift(y,3),y4=shift(y,4))]

dt <- dt[complete.cases(dt)]

ic_dt <- data.table(k=c(1:4),AIC=as.numeric(NA),SIC=as.numeric(NA))

for(i in 1:nrow(ic_dt)){

fmla <- as.formula(paste("y",paste0("y",c(1:i),collapse="+"),sep="~"))

reg_ar <- lm(fmla,data=dt)

ic_dt$AIC[i] <- log(crossprod(reg_ar$residuals))+2*(i+1)/nrow(dt)

ic_dt$SIC[i] <- log(crossprod(reg_ar$residuals))+log(nrow(dt))*(i+1)/nrow(dt)

}

ic_dt## k AIC SIC

## 1: 1 6.030794 6.052564

## 2: 2 5.987318 6.019972

## 3: 3 5.989787 6.033326

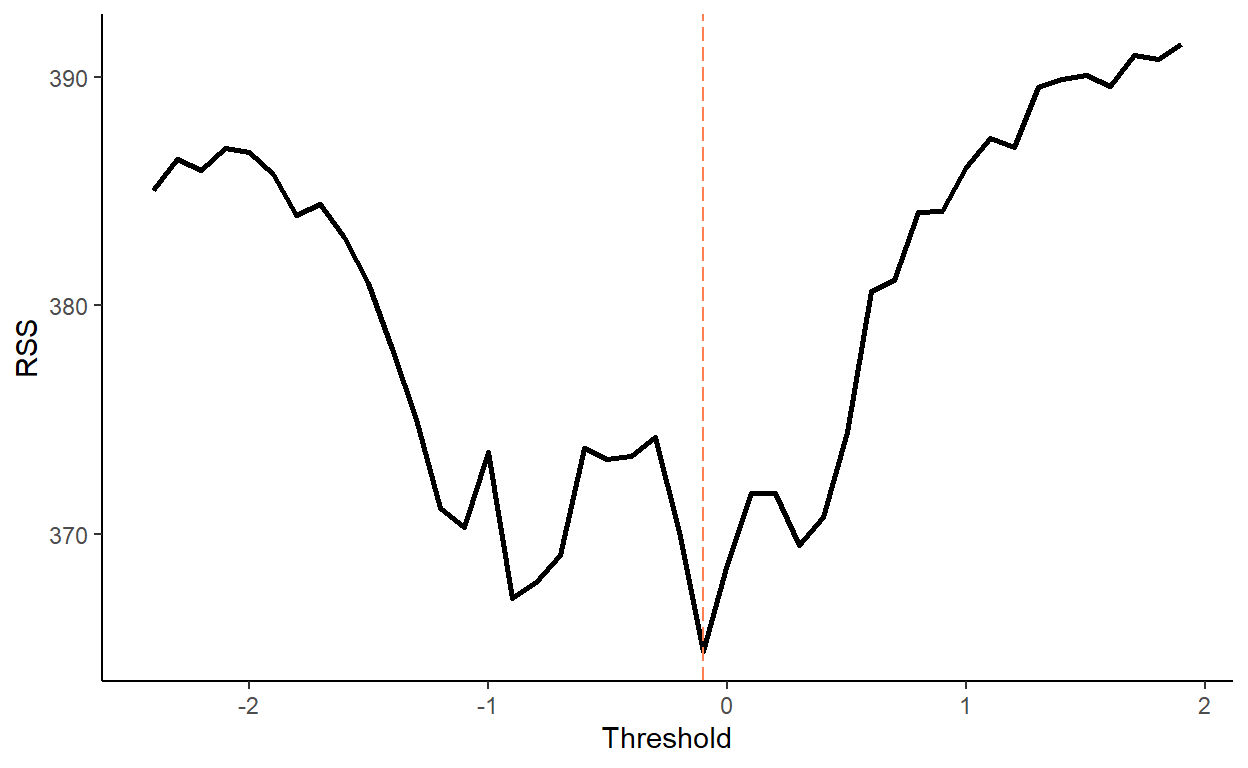

## 4: 4 5.994844 6.049268We now need to guess the threshold parameter (i.e., the value at which the switch between the regimes happens). For that, we perform a grid-search routine. We will consider a range of candidate thresholds that are within 10th and 90th percentiles of the lagged dependent variable. For each candidate threshold, we will run an OLS and calculate the residual sums of squares. A threshold parameter that yields the smallest residual sum of squares will be the estimate. Plot the residual sums of squares against the candidate threshold parameters to illustrate the optimal threshold selection routine.

qy <- round(quantile(dt$y,c(.1,.9)),1)

tr <- seq(qy[1],qy[2],by=.1)

grid_dt <- data.table(tr,ssr=NA)

grid_dt[,`:=`(ssr=as.numeric(ssr))]

for(i in tr){

dt[,`:=`(d=ifelse(y1>=i,1,0))]

tar <- lm(y~(y1+y2):I(d)+(y1+y2):I(1-d),data=dt)

grid_dt[tr==i]$ssr <- crossprod(tar$residuals)

}

tr_hat <- grid_dt[ssr==min(ssr)]$tr

tr_hat## [1] -0.1ggplot(grid_dt,aes(x=tr,y=ssr))+

geom_line(size=1)+

geom_vline(xintercept=tr_hat,color="coral",linetype=5)+

labs(x="Threshold",y="RSS")+

theme_classic()

Estimate the threshold autoregression and compare the parameter estimates with the true parameters of the model.

##

## Call:

## lm(formula = y ~ (y1 + y2):I(d) + (y1 + y2):I(1 - d), data = dt)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.07702 -0.69964 0.01969 0.65595 2.47130

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.01150 0.08554 0.134 0.893

## y1:I(d) 0.70886 0.08895 7.969 2.26e-14 ***

## y2:I(d) 0.06424 0.07576 0.848 0.397

## y1:I(1 - d) 1.15232 0.07632 15.099 < 2e-16 ***

## y2:I(1 - d) -0.45200 0.06818 -6.630 1.27e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.02 on 351 degrees of freedom

## Multiple R-squared: 0.6447, Adjusted R-squared: 0.6407

## F-statistic: 159.3 on 4 and 351 DF, p-value: < 2.2e-16Generate a sequence of one-step-ahead forecasts from TAR(2) using the rolling window scheme, where the first rolling window ranges from period 1 to period 240. For comparison, also generate the one-step-ahead forecasts from the AR(2) and the random walk models. Calculate the RMSFE measures for the three models.

R <- 240

P <- nrow(dt)-R

dt[,`:=`(rw=as.numeric(NA),ar=as.numeric(NA),tar=as.numeric(NA))]

for(i in 1:P){

ar <- lm(y~y1+y2,data=dt[i:(R-1+i)])

tar <- lm(y~(y1+y2):I(d)+(y1+y2):I(1-d),data=dt[i:(R-1+i)])

dt$rw[R+i] <- dt$y[R-1+i]

dt$ar[R+i] <- ar$coefficients[1]+ar$coefficients[2]*dt$y[R-1+i]+ar$coefficients[3]*dt$y[R-2+i]

if(dt$y[R-1+i]>=0){

dt$tar[R+i] <- tar$coefficients[1]+tar$coefficients[2]*dt$y[R-1+i]+tar$coefficients[3]*dt$y[R-2+i]

}else{

dt$tar[R+i] <- tar$coefficients[1]+tar$coefficients[4]*dt$y[R-1+i]+tar$coefficients[5]*dt$y[R-2+i]

}

}

dt[,`:=`(rw_e=y-rw,ar_e=y-ar,tar_e=y-tar)]

rmsfe_rw <- sqrt(mean(dt$rw_e^2,na.rm=T))

rmsfe_ar <- sqrt(mean(dt$ar_e^2,na.rm=T))

rmsfe_tar <- sqrt(mean(dt$tar_e^2,na.rm=T))

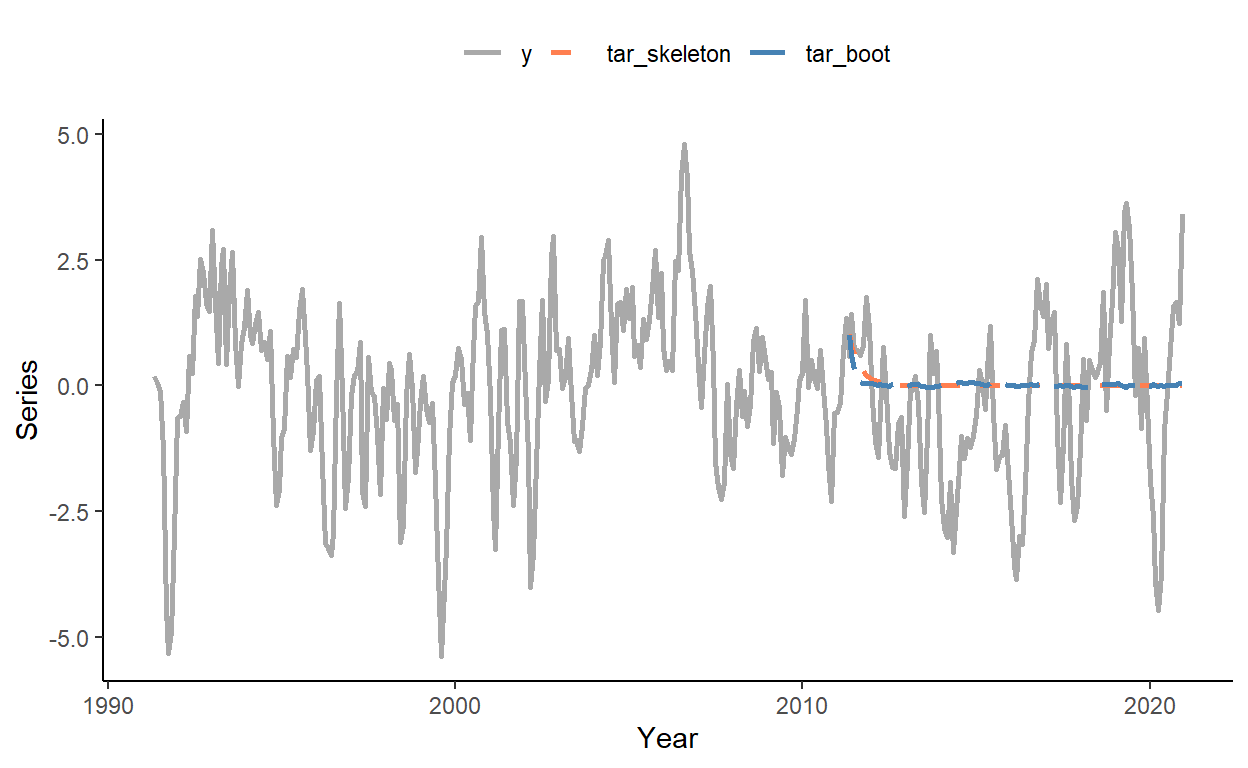

rmsfe_rw## [1] 1.091629## [1] 1.046419## [1] 1.024177Obtain the multi-step-ahead forecasts from period 241 onward using the so-called ‘skeleton extrapolation’ method (which yields biased forecasts) and the bootstrap resampling method (a numerical method that yields valid multi-step-ahead forecasts from nonlinear models). Plot the two forecasts along with the time series.

dt[,`:=`(tar_skeleton=y)]

tar <- lm(y~(y1+y2):I(d)+(y1+y2):I(1-d),data=dt[1:R])

for(i in 1:P){

if(dt$tar_skeleton[R-1+i]>=0){

dt$tar_skeleton[R+i] <- tar$coefficients[1]+tar$coefficients[2]*dt$tar_skeleton[R-1+i]+tar$coefficients[3]*dt$tar_skeleton[R-2+i]

}else{

dt$tar_skeleton[R+i] <- tar$coefficients[1]+tar$coefficients[4]*dt$tar_skeleton[R-1+i]+tar$coefficients[5]*dt$tar_skeleton[R-2+i]

}

}

dt[1:R]$tar_skeleton <- NA

B <- 5000 # the number of bootstrap simulations

boot_mat <- replicate(B,dt$y)

for(b in 1:B){

eps <- sample(tar$residuals,P,replace=T)

for(i in 1:P){

if(boot_mat[R-1+i,b]>=0){

boot_mat[R+i,b] <- tar$coefficients[1]+tar$coefficients[2]*boot_mat[R-1+i,b]+tar$coefficients[3]*boot_mat[R-2+i,b]+eps[i]

}else{

boot_mat[R+i,b] <- tar$coefficients[1]+tar$coefficients[4]*boot_mat[R-1+i,b]+tar$coefficients[5]*boot_mat[R-2+i,b]+eps[i]

}

}

}

dt$tar_boot <- rowMeans(boot_mat)

dt[1:R]$tar_boot <- NA

sub_lg <- melt(dt[,.(date,y,tar_skeleton,tar_boot)],id.vars="date")

ggplot(sub_lg,aes(x=date,y=value,color=variable,linetype=variable))+

geom_line(size=1,na.rm=T)+

scale_color_manual(values=c("darkgray","coral","steelblue"))+

scale_linetype_manual(values=c(1,2,5))+

labs(x="Year",y="Series")+

theme_classic()+

theme(legend.position="top",legend.title=element_blank())